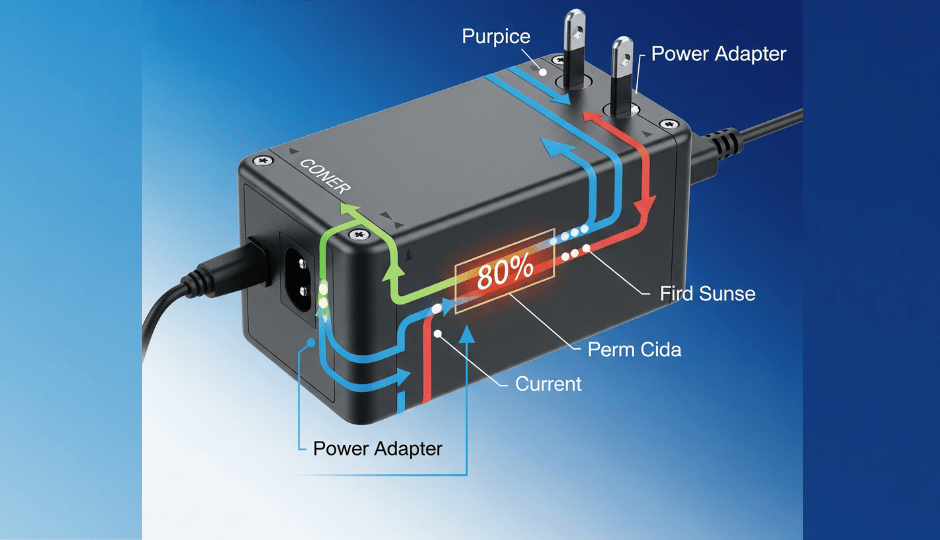

When your power supply fails early or your device behaves oddly, it might be because you’re unknowingly pushing the limits. Ever heard of the 80% rule? Here’s why it’s crucial.

The 80% rule means that a power supply should ideally operate at no more than 80% of its maximum rated capacity to maintain efficiency, reduce heat, and extend lifespan.

Ever wonder why your equipment heats up more than expected or your adapter dies too soon?

This is often linked to ignoring the 80% rule. Overspecifying or underspecifying power needs creates problems. This guideline helps ensure that your device runs within a safe and efficient operating range, especially under continuous use. In my experience working with design teams, respecting this rule always pays off in fewer customer complaints and better durability.

How Do You Calculate the 80% Rule in Real Scenarios?

Power problems often start with one mistake—choosing a power supply that just meets your device’s needs.

To apply the 80% rule, divide your device’s power consumption by 0.8. This gives you the minimum recommended power supply wattage. For example, a device that draws 80W should pair with at least a 100W power supply.

Let me break this down with a simple example from one of our client projects. The client was designing a consumer-grade tablet dock that required 48W of power. Initially, they chose a 48W adapter. It worked fine in lab tests, but failed in hot ambient conditions during real-world use. After applying the 80% rule and switching to a 60W adapter, the failure rate dropped to nearly zero. This kind of proactive design can avoid costly recalls or warranty replacements.

Here’s how to do the math:

80% Rule Formula

| Device Power Need (W) | Operation | Minimum Adapter Wattage (W) |
|---|---|---|
| 48 | ÷ 0.8 | 60 |
| 72 | ÷ 0.8 | 90 |
| 120 | ÷ 0.8 | 150 |

Use this chart during your design phase. This rule is especially important for engineers like David, who face pressure balancing performance and cost.
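The same calculation can be scripted so it is easy to repeat for every device in a product line. The snippet below is a minimal sketch, not a definitive sizing tool: the function names and the `CATALOG_WATTS` list of adapter ratings are hypothetical and should be replaced with the ratings your supplier actually offers.

```python
def min_supply_wattage(device_watts: float, max_load_fraction: float = 0.8) -> float:
    """Return the smallest supply rating that keeps the load at or below the given fraction."""
    if device_watts <= 0:
        raise ValueError("device_watts must be positive")
    if not 0 < max_load_fraction <= 1:
        raise ValueError("max_load_fraction must be in (0, 1]")
    return device_watts / max_load_fraction


# Hypothetical list of available adapter ratings in watts (an assumption, not a real catalog).
CATALOG_WATTS = [24, 30, 45, 60, 65, 90, 100, 120, 150]


def pick_adapter(device_watts: float, catalog=CATALOG_WATTS, max_load_fraction: float = 0.8):
    """Return the smallest catalog rating that satisfies the rule, or None if none is large enough."""
    required = min_supply_wattage(device_watts, max_load_fraction)
    # Small tolerance so floating-point rounding does not reject an exact match.
    candidates = [w for w in sorted(catalog) if w >= required - 1e-9]
    return candidates[0] if candidates else None


if __name__ == "__main__":
    for watts in (48, 72, 120):
        print(f"{watts}W device -> at least {min_supply_wattage(watts):.0f}W, pick {pick_adapter(watts)}W")
```

Rounding up to the next available rating matters in practice, because the computed minimum (for example 106.25W for an 85W load) rarely matches a standard adapter size.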

Why Is Staying Below 80% Capacity Better for Reliability?

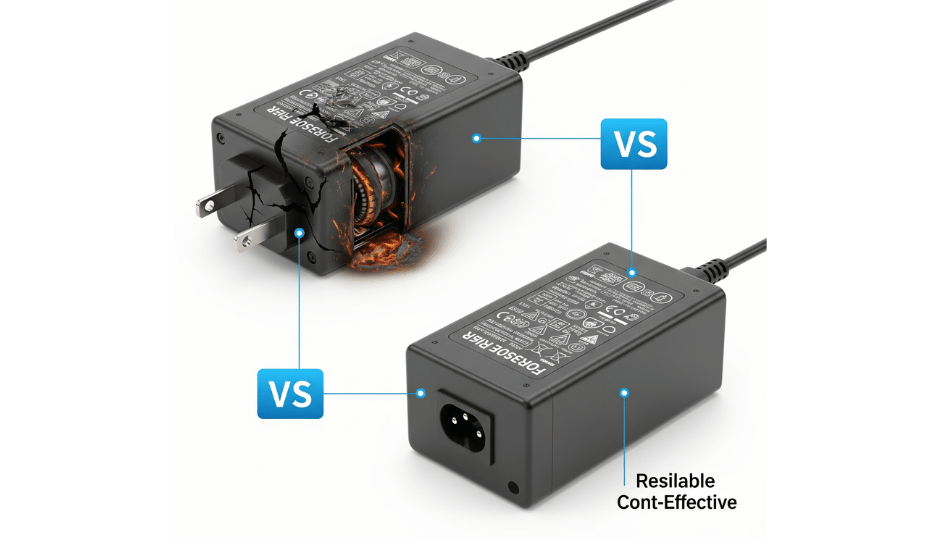

When things run at full capacity all the time, they wear out faster.

Staying below 80% load reduces internal heat, increases energy efficiency, and prolongs the lifespan of both the power adapter and the end device.

Heat is the enemy of electronics. I’ve seen this firsthand during field tests. Once, we supplied a 90W adapter to a kiosk maker for their signage units. The signage only drew 85W. On paper, it matched. But after a few months of summer usage, over 30% of the units failed due to overheating. We later replaced them with 120W adapters, and failures dropped to under 1%.
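To see how close the kiosk pairing was to the limit, it helps to express the load as a percentage of the adapter rating. This is a small sketch using the figures from the story above; the function names are my own and the 80% threshold is simply the rule of thumb from this article, not a value taken from any standard.

```python
def load_percent(device_watts: float, supply_watts: float) -> float:
    """Return the device draw as a percentage of the supply's rated output."""
    if supply_watts <= 0:
        raise ValueError("supply_watts must be positive")
    return 100.0 * device_watts / supply_watts


def within_80_percent(device_watts: float, supply_watts: float, limit_percent: float = 80.0) -> bool:
    """True if the pairing keeps the load at or below the limit (with a tiny float tolerance)."""
    return load_percent(device_watts, supply_watts) <= limit_percent + 1e-9


if __name__ == "__main__":
    # Kiosk example: 85W signage on the original 90W adapter, then on the 120W replacement.
    for supply in (90, 120):
        pct = load_percent(85, supply)
        verdict = "ok" if within_80_percent(85, supply) else "over the 80% guideline"
        print(f"85W on {supply}W adapter: {pct:.1f}% load ({verdict})")
    # 85W on 90W adapter: 94.4% load (over the 80% guideline)
    # 85W on 120W adapter: 70.8% load (ok)
```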

There’s another reason this matters: heat affects compliance[^1]. Excessive heat may cause your product to fall out of line with energy efficiency[^2] requirements such as DOE Level VI, or with the safety requirements behind CE marking, putting you at risk legally and financially.

Here are some side effects of ignoring the rule:

Risks of Operating Above 80% Load

- High internal temperature, which shortens component life

- Less margin for current surges or spikes

- Audible noise from internal fans or components

- Lower conversion efficiency as the supply approaches full load

- Greater chance of non-compliance in safety tests

For companies shipping globally, like the one David works at, this could mean delays in certification or added cost from redesigns.

How Does the 80% Rule Affect Cost Optimization?

It’s tempting to cut costs by matching the adapter wattage to the device exactly. But that can backfire.

Using the 80% rule means spending slightly more upfront on a higher-rated power supply, but it lowers the total cost of ownership by reducing failures and returns.

Many purchasing managers push back on this idea at first. One client we worked with insisted on using 24W adapters for a medical device that draws 22W, which is roughly a 92% load. After deployment, they started seeing thermal shutdowns and battery charging failures in the field. The cost of servicing those failures far exceeded the minor upfront cost of upgrading to 30W adapters, which would have brought the load down to about 73%.

Let’s visualize the trade-off:

Cost Comparison: 100W vs. 125W Adapter

| Spec | 100W Adapter | 125W Adapter |
|---|---|---|
| Unit Cost (Estimate) | $5.00 | $6.25 |
| Load for 90W Device | 90% | 72% |
| Failure Rate (Est.) | 8% | <1% |
| Return Handling Cost | $15/unit | $0 |
| Total Cost per 1,000 pcs | $20,000 | $6,250 |

When calculated in full, the slightly more expensive adapter ends up saving thousands. This matters when you’re producing at scale and can’t afford surprises in the supply chain.
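The totals in the comparison can be reproduced with a simple expected-cost model. The sketch below rests on one reading of the table that the article does not spell out: the $15 return handling figure is treated as an average over every shipped unit, which at an 8% failure rate corresponds to about $187.50 per failed unit. The function name, that per-failure figure, and the 0% failure rate used for the 125W adapter (the table lists "<1%" and $0 handling) are all assumptions for illustration.

```python
def expected_total_cost(unit_cost: float, failure_rate: float,
                        cost_per_failure: float, quantity: int = 1000) -> float:
    """Purchase cost plus expected failure-handling cost for a production run."""
    if not 0 <= failure_rate <= 1:
        raise ValueError("failure_rate must be between 0 and 1")
    purchase = unit_cost * quantity
    expected_failures = failure_rate * quantity
    return purchase + expected_failures * cost_per_failure


if __name__ == "__main__":
    # Assumed: $187.50 per failed unit, i.e. $15 averaged over all shipped units at 8% failures.
    cost_100w = expected_total_cost(unit_cost=5.00, failure_rate=0.08, cost_per_failure=187.50)
    # Assumed: failure handling treated as zero for the 125W adapter, as in the table.
    cost_125w = expected_total_cost(unit_cost=6.25, failure_rate=0.0, cost_per_failure=187.50)
    print(f"100W adapter: ${cost_100w:,.0f} per 1,000 pcs")
    print(f"125W adapter: ${cost_125w:,.0f} per 1,000 pcs")
    print(f"Difference:   ${cost_100w - cost_125w:,.0f}")
```

The result is sensitive to the cost per failed unit, so it is worth plugging in your own service, shipping, and replacement figures before relying on the comparison.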

Should You Always Apply the 80% Rule?

Some exceptions exist. But not many.

Apply the 80% rule by default, unless the power supply sits in a highly controlled environment or is only used for short bursts.

Sometimes in prototyping, we test devices at 100% load. But that’s not the same as long-term use. Heat cycles and real-world conditions like power spikes or poor ventilation take a toll.

There are a few cases where the rule may be relaxed:

- Lab-only or demo setups

- Environments with active cooling (like internal fans)

- Devices that operate at peak load only occasionally

But if your product will run 24/7 or live inside a tight casing without airflow, I’d never recommend cutting it that close. In real-world design, margin isn’t just a safety net—it’s good engineering practice.

Conclusion

Using the 80% rule for power supplies improves device reliability, reduces heat, and saves long-term costs. It’s a simple but powerful principle that every designer should follow.

[^1]: Explore compliance standards to avoid legal and financial risks in your electronics manufacturing process.

[^2]: Learn how energy efficiency standards affect product design and compliance, ensuring your products meet legal requirements.